Ever wondered how your smart speaker magically perks up when you say its name, even when the Wi-Fi is down? Or how your new camera can spot a squirrel in the yard without sending a single byte of video to the cloud?

The secret isn’t always hidden away in some massive data center. More and more, the magic is happening right inside the device itself. Welcome to the wild, wonderful world of Edge AI and TinyML—where artificial intelligence gets a crash course in minimalism.

This is where AI trades its cushy server rack for a tiny chip, learning to run on a shoestring budget of power and memory. It’s the digital frontier, and things are getting exciting!

Over the next eight weeks, we’re going to pull back the curtain and decode how it all works. Think of this as your friendly field guide. Each week, we’ll tackle one big question, breaking down everything from the hardware that makes it possible to the ethical dilemmas of an “always-on” world.

Whether you’re a curious hobbyist with a Raspberry Pi, a seasoned developer looking to optimize a model, or just someone who wants to know how your smart toaster really works, you’ve come to the right place.

Strap in, get curious, and let’s dive into the tiny but mighty universe of Edge AI!

Week 1: So, Why Bother with the Edge Anyway?

Why are edge devices important in AI deployment? And what’s this TinyML thing everyone’s talking about?

Relying on the cloud for AI is like ordering pizza every night. It’s convenient, but it can be slow, costly, and what happens if the delivery driver gets lost? Edge devices are like having a master chef right in your kitchen. By processing data locally, they offer some serious perks:

- Super-Speedy Responses: For things like self-driving cars or factory robots, waiting for a signal to travel to the cloud and back is a non-starter. Edge AI slashes that latency, making instant reactions possible.

- Keeps Networks Happy: Constantly streaming raw video or sensor data can clog up networks and run up data costs. The edge processes the data first and only sends what’s important.

- Always-On Reliability: No Wi-Fi? No problem! An edge device can often keep its core AI features running, which is a game-changer for critical systems or devices in remote locations.

- Your Data Stays Your Data: This is a big one. By keeping sensitive information (like camera feeds from inside your home) on the device, edge AI is a massive win for privacy and security.

And TinyML? It’s the art of squeezing that AI chef into the smallest kitchen imaginable—think wearables, implants, and tiny sensors. Its biggest impact is putting intelligence in places we never thought possible, like a medical implant that predicts health events or a smart home that truly learns your habits to save energy.

Week 2: Meet the Contenders: A Hardware Showdown

What hardware hurdles do you face at the edge? Let’s compare Raspberry Pi, Arduino/ESP32, and Coral.

Moving AI to the edge means you can’t just throw infinite computing power at a problem. You’re working with a tight budget of processing power, memory, and electricity. Here’s a quick look at the main players and how they stack up.

Table 1: Comparison of Edge AI Hardware Options

| Aspect |

Raspberry Pi 5 |

Arduino Uno |

ESP32 |

Google Coral |

| Processor |

Cortex-A76 (2.4GHz) |

ATmega328P (16 MHz) |

Xtensa LX6 (240 MHz) |

Cortex-A53 + TPU |

| AI Accelerator |

None |

None |

None |

Edge TPU |

| Memory |

up to 16 GB |

2 KB SRAM |

520 KB SRAM |

1 GB RAM |

| Storage |

microSD, PCIe 2.0 |

32 KB Flash |

External Flash |

8 GB eMMC |

| Power |

Moderate (3-7W) |

Very Low (<1W) |

Low (~1W) |

Low-Moderate (2-5W) |

| Connectivity |

Wi-Fi 5, Ethernet, BT 5.0 |

None |

Wi-Fi, BT |

Wi-Fi, BT, USB |

| Inference Speed |

Moderate |

Limited |

Limited |

Very Fast |

| Suitability |

General Purpose |

Basic tasks |

Simple IoT |

Real-time Inference |

The Contenders’ Corner:

- Raspberry Pi: The Friendly All-Rounder. The Pi is the Swiss Army knife of edge hardware. It feels like a mini-computer, runs Linux, and lets you use familiar tools like Python. It’s fantastic for learning and prototyping. But its power consumption and lack of a dedicated AI “engine” mean it’s a jack-of-all-trades, but a master of none.

- Arduino / ESP32: The Minimalist Marathon Runners. These guys are the champions of efficiency. They sip power, making them perfect for battery-operated sensors that need to last for months. You won’t be running complex AI on them, but for simple “always-on” tasks, they are the undisputed kings. The ESP32 is the souped-up version with Wi-Fi and Bluetooth, making it a surprisingly capable little hero.

- Google Coral: The AI Specialist. When your project is AI, you bring in the specialist. The Coral is built for one thing: running machine learning models, and running them fast. Its secret weapon is the Edge TPU, a co-processor that handles the AI heavy lifting. It’s less of a general-purpose tool, but for real-time video analysis or other demanding AI tasks, the Coral is in a class of its own.

Week 3: What’s a “Lightweight” Model? (And What’s the Catch?)

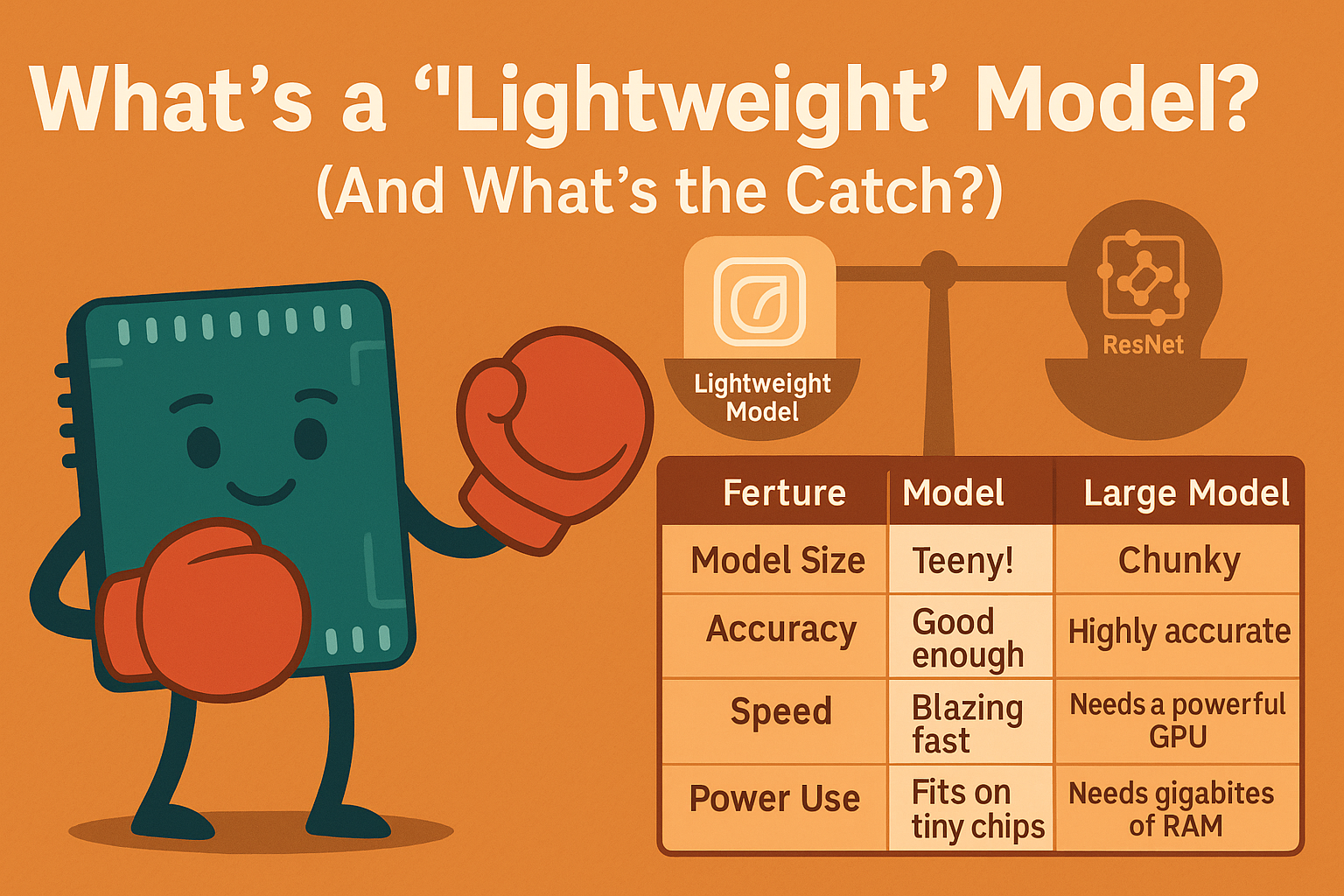

What makes a vision model “lightweight”? Let’s talk about the classic trade-off: accuracy vs. size.

A “lightweight” model is an AI that’s been on a serious diet. It’s been optimized to have fewer moving parts (parameters) so it can run on devices with tiny memories and processors. Think of models like MobileNet or Tiny-YOLO—they’re designed to be nimble and efficient.

But, as with any diet, there’s a trade-off. You can’t have it all.

| Feature |

Lightweight Model (MobileNet, Tiny-YOLO) |

Large Model (ResNet, EfficientNet) |

| Model Size |

Teeny! (< 10 MB) |

Chunky (50-500+ MB) |

| Accuracy |

Good enough for many jobs |

Highly accurate & robust |

| Speed |

Blazing fast, even on a microcontroller |

Needs a powerful GPU to be fast |

| Power Use |

Sips power |

Guzzles electricity |

| Hardware Needs |

Fits on tiny chips (< 256KB RAM) |

Needs gigabytes of RAM |

The main catch is accuracy. A smaller model just can’t learn the same amount of nuance as a giant one. But for many edge use cases—like telling a person from a pet—”good enough” is perfect, especially when the alternative is a model that’s too slow, too power-hungry, or just plain won’t fit on the device.

Week 4: Shrink-Ray for AI: The Magic of Quantization

Quantization is like giving your AI model a smaller, simpler vocabulary. Instead of using complex, high-precision numbers (like 32-bit floats), it learns to work with simpler ones (like 8-bit integers).

Why do this? Two huge reasons:

- It gets smaller: Simpler numbers take up less space, making the model easier to store and load on a tiny device.

- It gets faster: Modern chips are rock stars at handling simple math. By simplifying the numbers, you allow the hardware to process information much more quickly and with less power.

The trade-off, of course, is that with a simpler vocabulary, the model might lose a tiny bit of its “eloquence”—a slight drop in accuracy. So when do you make that trade?

- Choose Accuracy: In high-stakes fields like healthcare or finance, you need your AI to be a Nobel laureate. A small mistake could be costly, so you prioritize getting the right answer, even if it takes a moment longer.

- Choose Speed (Latency): For a voice assistant or an AR game, you need a fast-talker. Users expect instant responses. A tiny, imperceptible dip in accuracy is a small price to pay for a smooth, lag-free experience.

Week 5: The Five Great Headaches of Edge Data

What are the key challenges when collecting and preprocessing data at the edge?

Getting good data on an edge device isn’t easy. You’re trying to find gold in a river of noise, using a tiny pan, while making sure nobody steals it. Here are the biggest headaches:

- 1. A Firehose of Bits with a Thimble of Compute: Cameras and sensors can produce a tidal wave of data. Your tiny edge device, with its limited memory and processing power, has to figure out what’s important and discard the rest in real-time.

- 2. Keeping Secrets on a Tiny Chip: How do you secure sensitive data on thousands of tiny devices scattered around the world? Implementing strong encryption and privacy controls on resource-starved hardware is a massive challenge.

- 3. Wrangling Messy, Real-World Data: Sensor data is rarely clean. It comes in different formats, with different timestamps, and is often just plain noisy. The device has to clean it all up on the fly.

- 4. Squeezing Data Through a Tiny Straw: Edge devices often have slow or unreliable internet. Sending all that raw data to the cloud is a no-go, which makes on-device compression and analysis absolutely essential.

- 5. Herding Cats: The Protocol Problem: Sensors from different manufacturers often speak different languages (protocols). Getting them to sync up and work together is a constant source of frustration.

Week 6: The Holy Trinity of Real-Time Vision

What’s critical for running real-time vision tasks like object tracking or gesture recognition?

For AI vision that feels instant and reliable on an edge device, you need to nail three things. We call it the Holy Trinity:

- Latency (Is it fast enough?): This is king. If there’s a delay between an action and the system’s response, the whole experience feels broken. The model must be optimized to run at lightning speed on its specific hardware.

- Robustness (Can it handle the mess?): The real world is not a clean laboratory. A robust model can handle blurry images, bad lighting, and objects that are partially hidden, all without needing the cloud to help clean up the input.

- Efficiency (Is it a power hog?): Edge devices have a finite amount of power and memory. An efficient model sips, rather than guzzles, these resources. This often involves smart tricks, like only running the AI when motion is detected.

And the secret fourth ingredient? Context. For tracking motion or gestures, one frame isn’t enough. The model needs to see a sequence. The trick is to do this without burning through all your resources—clever models do this by reusing information between frames instead of starting from scratch every time.

Week 7: The Edge AI Report Card: How Do You Grade a Model?

You can’t just pick the “smartest” model. You need the right model for the job. To find it, you need to grade it across a few key subjects. Here’s the report card:

- Subject 1: Accuracy

- What it is: Is the model actually useful? Does it get the right answer often enough?

- How it’s graded: Using scores like Precision, Recall, and Error Rate.

- Subject 2: Latency

- What it is: Is it fast enough for a real-time experience?

- How it’s graded: By measuring Inference Time (how long a single decision takes) and Inferences Per Second (how many decisions it can make in a second).

- Subject 3: Efficiency

- What it is: Can it run without crashing the device or killing the battery?

- How it’s graded: By measuring Power Consumption (in watts), Memory Footprint (RAM/storage usage), and CPU/GPU Utilization.

Real-World Grade Point Averages (GPAs):

- Smart Doorbell: Gets an A+ in Power Efficiency because battery life is critical. A B- in Latency is perfectly fine (~500ms delay is acceptable).

- Augmented Reality Glasses: Get an A+ in Latency because any delay causes motion sickness. Power and Accuracy are B’s—important, but secondary to speed.

- Industrial Defect Camera: Gets an A+ in Accuracy and Throughput. It’s plugged into a wall, so Power Efficiency is a C—it doesn’t matter as much.

Week 8: With Great Power Comes Great Responsibility

What privacy concerns arise with always-on edge vision systems? How do we mitigate them?

An “always-on” camera or microphone raises serious privacy red flags. These devices can capture our most private moments, often without our full awareness. This isn’t just a technical problem; it’s an ethical one.

So, how do we build these amazing tools responsibly? We need a robust Privacy Playbook:

- Consent and Transparency: Be radically honest. Tell people exactly what the device is doing and get their clear permission. No hidden features, no confusing legal jargon.

- On-Device Anonymization: The best way to prevent a data leak is to not have sensitive data in the first place. Use techniques like face blurring or converting images into abstract data on the device, before anything is stored or sent.

- Airtight Security: Use strong encryption and strict access controls. Assume bad actors will try to get in and build defenses to stop them.

- Regular Audits: Don’t just set it and forget it. Regularly check your systems for vulnerabilities and ensure they comply with privacy laws like GDPR.

George Orwell’s 1984 wasn’t a prophecy about gadgets; it was a warning about unchecked power. To keep Edge AI on the right side of that line, we must internalize three rules: privacy by default, a clear understanding that capability does not equal permission, and a demand for clear, fair regulation. By doing so, we can innovate responsibly and build a future we actually want to live in.